" If you find that you’re spending almost all your time on theory, start turning some attention to practical things; it will improve your theories. If you find that you’re spending almost all your time on practice, start turning some attention to theoretical things; it will improve your practice. "

-Donald Knuth

Why not Walk the AST ?

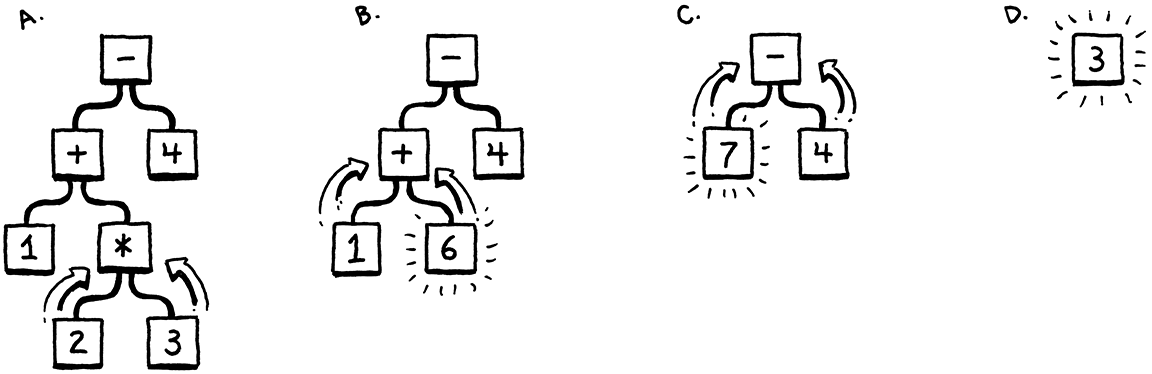

The inefficiency arises from the way memory is utilized. Each bit of code syntax is transformed into an AST node, resulting in a multitude of objects linked together with numerous pointers. Even a simple expression like '1 + 2' generates a cluster of objects with interconnections.

These pointers add extra overhead of 32 or 64 bits to each object, dispersing data across the heap in a disjointed structure, which adversely impacts spatial locality.

Modern CPUs operate far more rapidly than their capability to retrieve data from RAM. To address this, processors incorporate multiple tiers of caching. When data is present in the cache, retrieval occurs significantly faster—sometimes up to a hundred times faster.

The mechanism that fills this cache involves speculative inclusion by the machine. Essentially, when the CPU reads data from RAM, it pulls in a set of adjacent bytes, storing them in the cache. If subsequent data requests align with these cached lines, the CPU operates efficiently like a well-coordinated assembly line.

To optimize cache utilization, the way code is stored in memory should mimic a dense and orderly structure, facilitating sequential reading.

However, the tree-like structure of the AST doesn't conform to this efficiency requirement. The dispersed nature of sub-objects within the tree causes inefficiencies. Traversing the tree often leads the CPU to step outside the cache bounds, necessitating a stall until new data is fetched from RAM. The overhead generated by these tree nodes, with their numerous pointers and object headers, further distances objects from each other, hindering cache performance.

Why not compile to native code?

To achieve maximum speed, it's essential to eliminate layers of indirection. This involves reaching down to the hardware level—machine code. The concept itself implies rapidity and directness.

The fastest programming languages compile directly into the native instruction set supported by the chip. This method has remained the most efficient since the early days when programmers manually crafted programs in machine code.

Writing machine code, often done on punched cards back in the day, was an intricate process. Native code is a concise sequence of operations represented in binary. Each instruction is typically between one and a few bytes in length and operates at an incredibly low level, involving commands like moving values between memory addresses and performing arithmetic operations on registers.

The CPU executes these instructions sequentially, without any tree structure as seen in our AST. Control flow is managed by direct jumps from one code location to another, devoid of indirection, overhead, or unnecessary pointer manipulation.

However, this lightning-fast performance comes with a price. Compiling code to native instructions is an arduous task. Most widely used chips have complex architectures with a multitude of instructions accumulated over decades, requiring sophisticated techniques for register allocation, pipelining, and instruction scheduling.

Moreover, opting for native code sacrifices portability. Mastery of one architecture merely grants access to one of several prevalent instruction sets. To support multiple architectures, one must delve into and write separate back ends for each unique instruction set—a time-consuming and challenging endeavor.

What is Bytecode?

Consider two ends of a spectrum: a tree-walk interpreter, which is straightforward, adaptable, and sluggish, versus native code, which is intricate, tied to specific platforms, and swift. Bytecode resides in the middle ground, preserving the portability of a tree-walker while sacrificing some simplicity for a performance boost, though not matching the speed of native code.

Structurally, bytecode mirrors machine code. It consists of a compact, sequential arrangement of binary instructions, reducing overhead and aligning favorably with cache behavior. However, it operates as a much simpler, higher-level instruction set compared to real-world chip architectures. (In many bytecode formats, each instruction is a single byte in length, hence the term "bytecode.")

If you were tasked with designing the simplest architecture for a native compiler from a source language, bytecode embodies that notion. It represents an idealized, conceptual instruction set that facilitates the compiler-writing process.

The challenge with a conceptual architecture is its non-existence in reality. To address this, an emulator comes into play—an emulated chip designed in software to interpret the bytecode instruction by instruction. This emulation creates a virtual machine (VM) framework.

The overhead introduced by this emulation layer is a primary reason why bytecode execution is slower than native code. However, in return, it bestows upon us the gift of portability. By crafting our VM in a language like C, which is already supported across the machines we're interested in, we gain the ability to execute our emulator on a variety of hardware platforms.